Shift security left with AWS Config

No-one likes a telling off from the security team, and we can’t rely on good will and experience alone to keep our infrastructure secure: we need comprehensive guardrails. One service commonly used to build them is AWS Config.

What is AWS Config?

NB: if you’re playing around with AWS Config in a lab environment, please be aware of the pricing. It isn’t free: I spent around £20 deploying a realistic set of rules (~114 rules across ~7 AWS accounts) to my lab environment and leaving them running for a fortnight.

AWS Config lets you define rules that check your resources are built according to best practices, both AWS’ and your own organization’s. Example rules include:

- Checking an S3 bucket has a public access block enabled.

- Checking a DynamoDB table has Point-in-Time-Recovery (PITR) enabled.

- Checking an IAM user has MFA enabled.

The full list is in the docs: List of AWS Config Managed Rules
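Conceptually, each rule looks at the configuration Config has recorded for a resource and returns a verdict. The sketch below is purely illustrative: the `BucketConfig` shape is a hypothetical simplification (not the real configuration item format), and AWS implements its managed rules itself. It only shows the kind of decision a rule like the S3 public access block check makes.

```typescript
// Verdicts mirror the complianceType values Config reports.
type ComplianceType = "COMPLIANT" | "NON_COMPLIANT" | "NOT_APPLICABLE";

// HYPOTHETICAL, simplified view of an S3 bucket's recorded configuration.
interface BucketConfig {
  resourceType: string;
  publicAccessBlock?: {
    blockPublicAcls: boolean;
    ignorePublicAcls: boolean;
    blockPublicPolicy: boolean;
    restrictPublicBuckets: boolean;
  };
}

// Illustrative check: every public access block setting must be switched on.
function evaluatePublicAccessBlock(config: BucketConfig): ComplianceType {
  // Rules are scoped to resource types; anything else isn't evaluated.
  if (config.resourceType !== "AWS::S3::Bucket") {
    return "NOT_APPLICABLE";
  }
  const block = config.publicAccessBlock;
  if (!block) {
    return "NON_COMPLIANT";
  }
  const allEnabled =
    block.blockPublicAcls &&
    block.ignorePublicAcls &&
    block.blockPublicPolicy &&
    block.restrictPublicBuckets;
  return allEnabled ? "COMPLIANT" : "NON_COMPLIANT";
}

console.log(evaluatePublicAccessBlock({ resourceType: "AWS::S3::Bucket" })); // NON_COMPLIANT
```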

How are AWS Config rules defined?

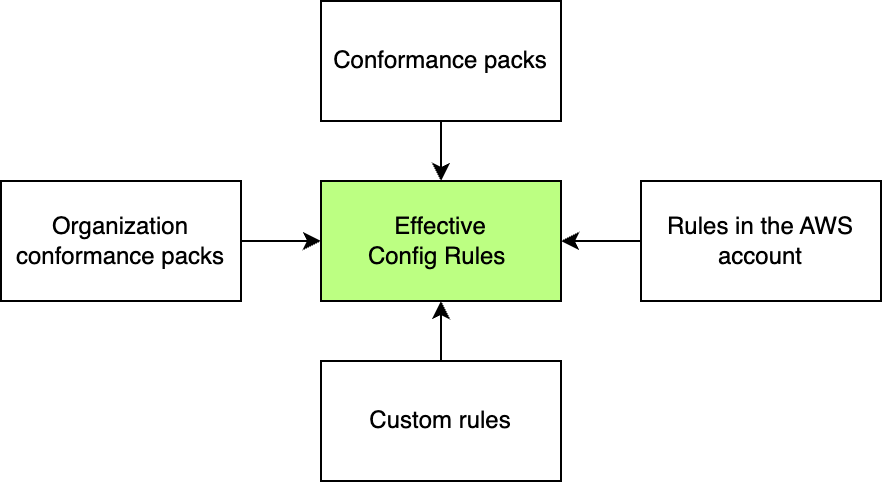

There are a number of sources for Config rules. You might define a “conformance pack”, a YAML-based template for a set of rules and their configuration. You could also define an organization conformance pack in your management account and deploy it to all member accounts. Some AWS security services also manage the creation of rules, e.g. the “security standards” that are part of Security Hub. These rule sources can be combined with rules deployed to individual accounts, for example if a workload has stricter compliance needs than the rest of your organization.
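To make the conformance pack idea concrete, here’s a minimal sketch that renders a pack template containing the three example rules from earlier. It assumes the CloudFormation-style `Resources` / `AWS::Config::ConfigRule` layout used by conformance pack templates; the logical IDs and rule names are my own choices, and the managed rule identifiers should be double-checked against the managed rules list. Values aren’t YAML-escaped, so it assumes plain alphanumeric/hyphenated names.

```typescript
// A managed rule is referenced by its identifier, e.g. DYNAMODB_PITR_ENABLED.
interface ManagedRule {
  logicalId: string; // template logical ID (alphanumeric)
  ruleName: string; // name the rule will have in each account
  sourceIdentifier: string; // AWS managed rule identifier
}

// Render a minimal conformance pack template as YAML text.
function buildConformancePack(rules: ManagedRule[]): string {
  const lines: string[] = ["Resources:"];
  for (const rule of rules) {
    lines.push(
      `  ${rule.logicalId}:`,
      "    Type: AWS::Config::ConfigRule",
      "    Properties:",
      `      ConfigRuleName: ${rule.ruleName}`,
      "      Source:",
      "        Owner: AWS",
      `        SourceIdentifier: ${rule.sourceIdentifier}`,
    );
  }
  return lines.join("\n") + "\n";
}

const template = buildConformancePack([
  { logicalId: "S3PublicAccess", ruleName: "s3-bucket-level-public-access-prohibited", sourceIdentifier: "S3_BUCKET_LEVEL_PUBLIC_ACCESS_PROHIBITED" },
  { logicalId: "DynamoDbPitr", ruleName: "dynamodb-pitr-enabled", sourceIdentifier: "DYNAMODB_PITR_ENABLED" },
  { logicalId: "IamUserMfa", ruleName: "iam-user-mfa-enabled", sourceIdentifier: "IAM_USER_MFA_ENABLED" },
]);
console.log(template);
```

The same template body could be deployed as an organization conformance pack from the management account, so every member account gets an identical rule set.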

Where do AWS Config reports go?

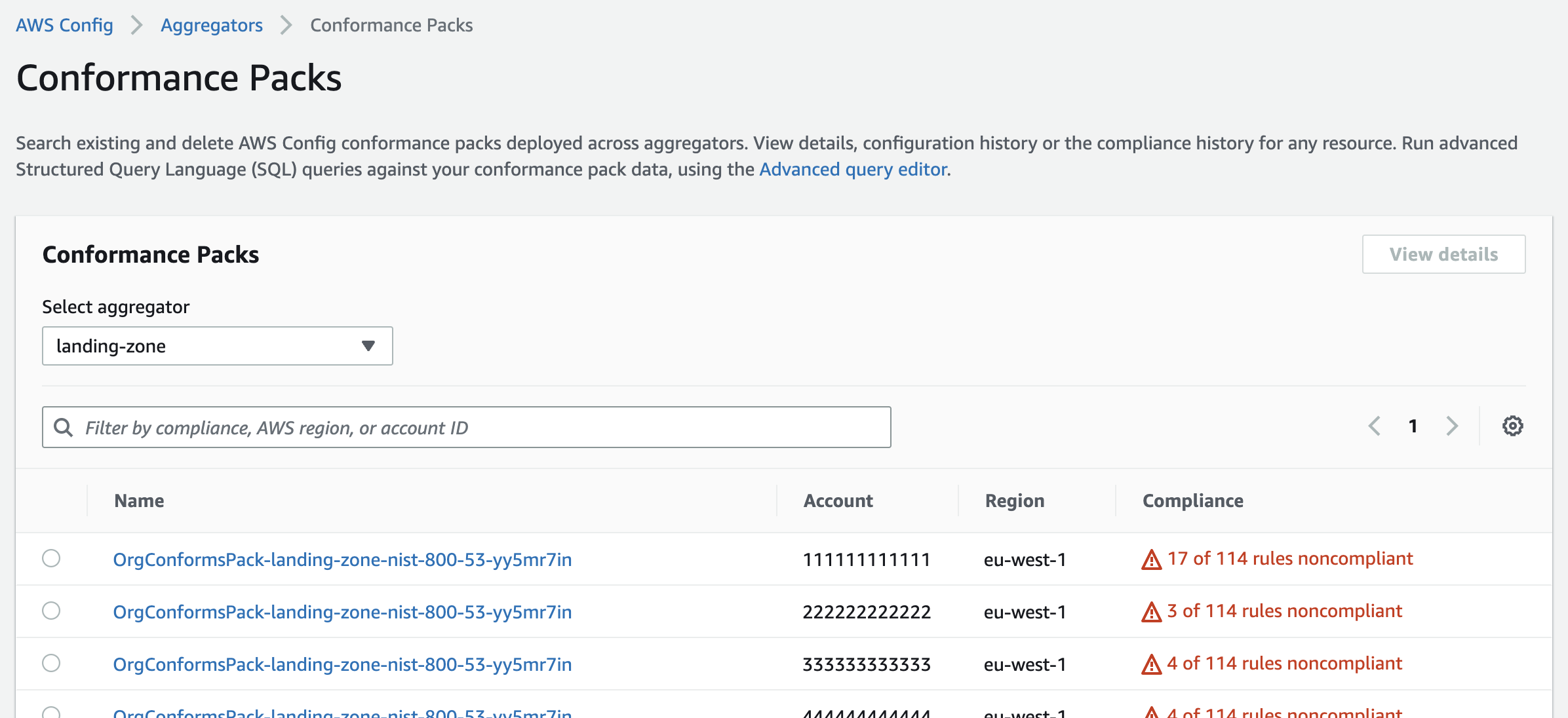

One of the more powerful features of AWS Config is being able to aggregate all findings into a central account in your AWS organization. We can centrally deploy rules, and then have each account report back with non-compliant infrastructure.
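The aggregator does this for us, but to make the idea concrete, here’s a toy sketch of the roll-up a central account gives you. The `Finding` shape is hypothetical and deliberately simpler than what the aggregator really returns.

```typescript
// HYPOTHETICAL, simplified finding as reported back from a member account.
interface Finding {
  accountId: string;
  configRuleName: string;
  resourceId: string;
  complianceType: "COMPLIANT" | "NON_COMPLIANT";
}

// Count non-compliant findings per account, as a central dashboard might.
function nonCompliantByAccount(findings: Finding[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const finding of findings) {
    if (finding.complianceType !== "NON_COMPLIANT") {
      continue;
    }
    counts.set(finding.accountId, (counts.get(finding.accountId) ?? 0) + 1);
  }
  return counts;
}

const counts = nonCompliantByAccount([
  { accountId: "111111111111", configRuleName: "s3-bucket-logging-enabled", resourceId: "jsj-bucket-777", complianceType: "NON_COMPLIANT" },
  { accountId: "111111111111", configRuleName: "dynamodb-pitr-enabled", resourceId: "orders", complianceType: "NON_COMPLIANT" },
  { accountId: "222222222222", configRuleName: "iam-user-mfa-enabled", resourceId: "alice", complianceType: "COMPLIANT" },
]);
console.log(counts);
```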

So far, so good!

Flow and fast feedback

We’ve had a whistle-stop tour of what Config rules are, how they’re defined, and how reporting is done, but how does that help us as humans building infrastructure? We’re interested in staying in flow with our current piece of work, not in fancy dashboards in a faraway account. How can we ensure our infrastructure meets the required standards without an e-mail arriving from another team two weeks after we built it?

Developer-centric tooling

One option is to embed a tool like terraform-compliance or cdk-nag in our build pipeline. This is a big help, but it takes effort to keep those tools’ rules in sync with the Config rules deployed to our AWS accounts.

Feedback in the Pull Request

You can love or hate Pull Requests, but many teams use them as a way to prepare work before it’s checked in to their main branch. What if we could get feedback on our infrastructure before we’ve even hit the big green merge button?

Let’s make it happen!

PS: if you want to skip straight to the code, check out jSherz/shift-security-left-aws-config on GitHub.

A quick segue into tags

This solution relies on tags that indicate which project the infrastructure resources belong to, and which git branch they’re deployed from. I really hope you’ve already got a tagging standard defined in your organization, but if not, there’s no time like the present to start.

No strategy? “Defining and publishing a tagging schema” in the AWS docs is a great place to start, and you’ll notice that the tags below follow a similar standard.

We’re going to use two tags for our resources:

| Tag name | Example value | Purpose |
|---|---|---|
| jsherz-com:workload:project | git@github.com:jSherz/shift-security-left-aws-config.git | Identifies which GitHub project contains this infrastructure. |
| jsherz-com:workload:ref | feature/my-cool-thing | Helps us find the right Pull Request for this infrastructure. |

The names aren’t important (you can customise them in the code for this solution), but we want to be able to quickly identify the project that contains the Infrastructure as Code (IaC) for the resources we build.
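If your IaC is written in TypeScript (e.g. a CDK app), or you generate values for Terraform’s provider-level default tags, the pair of tags can be built in one place. A minimal sketch: `buildWorkloadTags` is a hypothetical helper, and how you get the branch name (e.g. from a CI environment variable) is left to your pipeline. The validation uses a conservative ASCII subset of the characters AWS allows in tag values; AWS also permits Unicode letters, and some services are stricter.

```typescript
// Conservative ASCII subset of the characters AWS allows in tag values.
const ALLOWED_TAG_VALUE = /^[A-Za-z0-9 _.:\/=+\-@]*$/;
const MAX_TAG_VALUE_LENGTH = 256;

// Build the project/ref tags for one deployment, e.g. companyIdentifier "jsherz-com".
function buildWorkloadTags(
  companyIdentifier: string,
  project: string,
  ref: string,
): Record<string, string> {
  for (const value of [project, ref]) {
    if (value.length > MAX_TAG_VALUE_LENGTH || !ALLOWED_TAG_VALUE.test(value)) {
      throw new Error(`invalid tag value: ${value}`);
    }
  }
  return {
    [`${companyIdentifier}:workload:project`]: project,
    [`${companyIdentifier}:workload:ref`]: ref,
  };
}

console.log(
  buildWorkloadTags(
    "jsherz-com",
    "git@github.com:jSherz/shift-security-left-aws-config.git",
    "feature/my-cool-thing",
  ),
);
```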

Responding when resources fail compliance checks

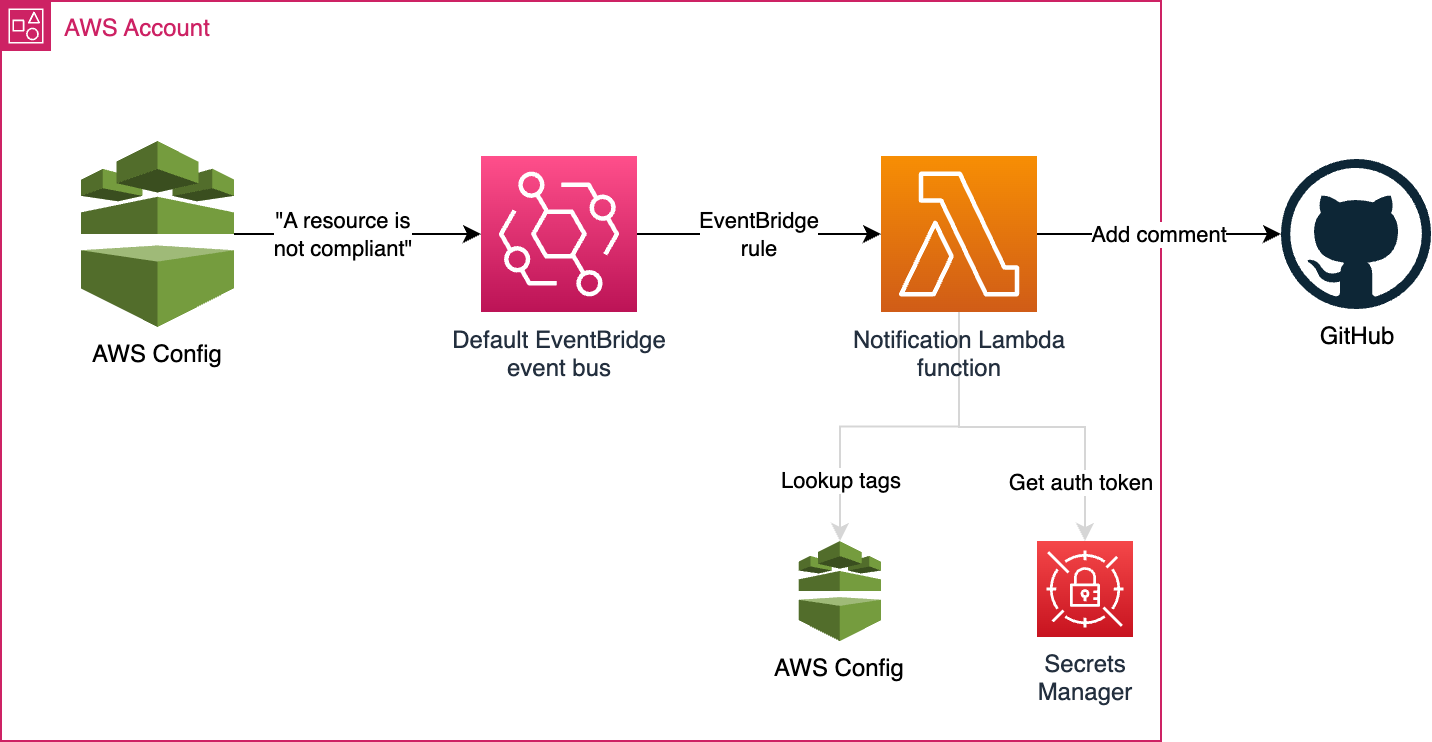

We want to give a user feedback ASAP when their resources fail a compliance check. We’ll do this by listening for notifications from AWS Config and then triggering a Lambda function which can add a GitHub Pull Request comment. It’s not as fast as the in-editor feedback you’d get with a compiler/linter, or the in-pipeline feedback you’d get with a tool like terraform-compliance, but it gives feedback that’s accurate and up-to-date with our organization-wide standards.
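Before looking at each piece, here’s a sketch of the overall flow the Lambda function follows. It’s written against hypothetical injected dependencies (`lookupResourceTags`, `findPullRequestNumber`, `postComment`) so it can be exercised without AWS or GitHub; only the first corresponds to a method in the project’s code shown later, and the comment text is a placeholder.

```typescript
// HYPOTHETICAL dependencies; real implementations would call Config and GitHub.
interface Deps {
  lookupResourceTags(resourceType: string, resourceId: string): Promise<Record<string, string>>;
  findPullRequestNumber(project: string, ref: string): Promise<number | undefined>;
  postComment(project: string, prNumber: number, body: string): Promise<void>;
}

interface NonCompliance {
  resourceType: string;
  resourceId: string;
  configRuleName: string;
}

// Tags -> Pull Request -> comment, skipping anything we can't attribute.
async function notifyPullRequest(
  deps: Deps,
  companyIdentifier: string,
  finding: NonCompliance,
): Promise<"commented" | "skipped"> {
  const tags = await deps.lookupResourceTags(finding.resourceType, finding.resourceId);
  const project = tags[`${companyIdentifier}:workload:project`];
  const ref = tags[`${companyIdentifier}:workload:ref`];

  // Untagged resources, or those deployed from main, have no PR to comment on.
  if (!project || !ref || ref === "main") {
    return "skipped";
  }

  const prNumber = await deps.findPullRequestNumber(project, ref);
  if (prNumber === undefined) {
    return "skipped";
  }

  await deps.postComment(
    project,
    prNumber,
    `Resource \`${finding.resourceId}\` failed Config rule \`${finding.configRuleName}\`.`,
  );
  return "commented";
}
```

Keeping the AWS and GitHub calls behind an interface like this makes the skip conditions easy to unit test with fakes.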

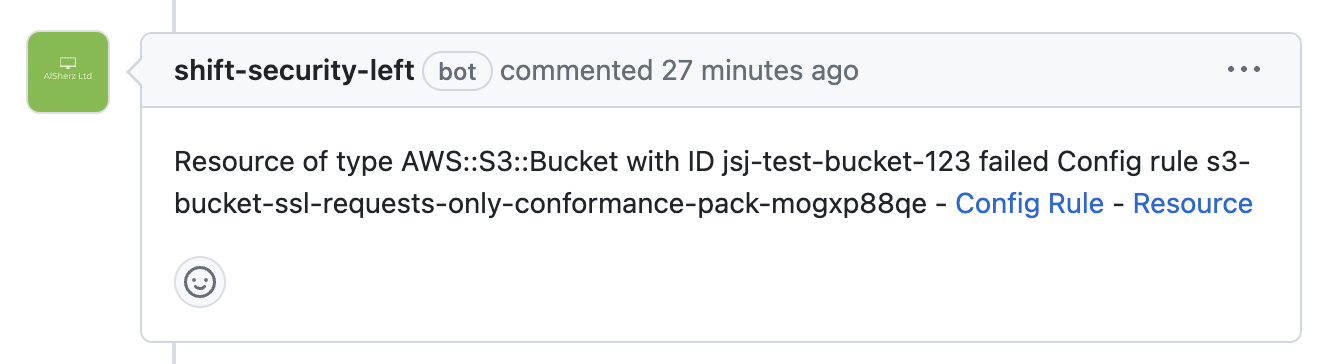

Here’s what that feedback will look like:

If the user can’t tell what the rule relates to from its name, they can click the link to see its description in the AWS console. They can also view the resource itself in the Config console to find any other rules it has failed.
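A comment like that boils down to a small Markdown string with two links. Here’s a sketch; `buildComment` and its wording are hypothetical, and the console deep-link formats are my ASSUMPTION of what the Config console uses, so verify them in your own console before relying on them.

```typescript
interface CommentInput {
  region: string;
  configRuleName: string;
  resourceType: string;
  resourceId: string;
}

// ASSUMPTION: console deep-link format; it may differ or change over time.
function consoleBase(region: string): string {
  return `https://${region}.console.aws.amazon.com/config/home?region=${region}`;
}

// Build a Markdown PR comment linking to the rule and to the resource in Config.
function buildComment(input: CommentInput): string {
  const base = consoleBase(input.region);
  const rule = encodeURIComponent(input.configRuleName);
  const resourceId = encodeURIComponent(input.resourceId);
  const resourceType = encodeURIComponent(input.resourceType);
  return [
    `:warning: \`${input.resourceType}\` \`${input.resourceId}\` is NON_COMPLIANT with \`${input.configRuleName}\`.`,
    "",
    `- [View the rule](${base}#/rules/details?configRuleName=${rule})`,
    `- [View the resource in Config](${base}#/resources/details?resourceId=${resourceId}&resourceType=${resourceType})`,
  ].join("\n");
}

console.log(
  buildComment({
    region: "eu-west-1",
    configRuleName: "s3-bucket-logging-enabled-conformance-pack-mogxp88qe",
    resourceType: "AWS::S3::Bucket",
    resourceId: "jsj-bucket-777",
  }),
);
```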

Here’s what the architecture that powers that looks like:

Config sends an event to the default EventBridge event bus with the following format:

```json
{
  "version": "0",
  "id": "33a1416f-68e6-6fe1-6313-7074141badb9",
  "detail-type": "Config Rules Compliance Change",
  "source": "aws.config",
  "account": "111111111111",
  "time": "2023-05-11T17:48:07Z",
  "region": "eu-west-1",
  "resources": [],
  "detail": {
    "resourceId": "jsj-bucket-777",
    "awsRegion": "eu-west-1",
    "awsAccountId": "111111111111",
    "configRuleName": "s3-bucket-logging-enabled-conformance-pack-mogxp88qe",
    "recordVersion": "1.0",
    "configRuleARN": "arn:aws:config:eu-west-1:111111111111:config-rule/aws-service-rule/config-conforms.amazonaws.com/config-rule-latfki",
    "messageType": "ComplianceChangeNotification",
    "newEvaluationResult": {
      "evaluationResultIdentifier": {
        "evaluationResultQualifier": {
          "configRuleName": "s3-bucket-logging-enabled-conformance-pack-mogxp88qe",
          "resourceType": "AWS::S3::Bucket",
          "resourceId": "jsj-bucket-777",
          "evaluationMode": "DETECTIVE"
        },
        "orderingTimestamp": "2023-05-11T17:47:56.716Z"
      },
      "complianceType": "NON_COMPLIANT",
      "resultRecordedTime": "2023-05-11T17:48:06.945Z",
      "configRuleInvokedTime": "2023-05-11T17:48:06.801Z"
    },
    "notificationCreationTime": "2023-05-11T17:48:07.470Z",
    "resourceType": "AWS::S3::Bucket"
  }
}
```
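The Lambda only needs a handful of those fields. Here’s a sketch of pulling them out and ignoring anything that isn’t a move to `NON_COMPLIANT`; the type names are my own, and `compliancePattern` shows how (assuming you want to) the same filter could instead be pushed into the EventBridge rule’s event pattern so the function is never invoked for compliant results.

```typescript
// Only the fields we use from the "Config Rules Compliance Change" event.
interface ComplianceChangeEvent {
  source: string;
  "detail-type": string;
  detail: {
    resourceId: string;
    resourceType: string;
    awsRegion: string;
    awsAccountId: string;
    configRuleName: string;
    newEvaluationResult?: { complianceType: string };
  };
}

interface NonCompliantResource {
  resourceId: string;
  resourceType: string;
  region: string;
  accountId: string;
  configRuleName: string;
}

// EventBridge pattern matching only non-compliant evaluations.
const compliancePattern = {
  source: ["aws.config"],
  "detail-type": ["Config Rules Compliance Change"],
  detail: { newEvaluationResult: { complianceType: ["NON_COMPLIANT"] } },
};

// Returns undefined for events we don't need to act on.
function extractNonCompliance(event: ComplianceChangeEvent): NonCompliantResource | undefined {
  if (event.source !== "aws.config" || event["detail-type"] !== "Config Rules Compliance Change") {
    return undefined;
  }
  if (event.detail.newEvaluationResult?.complianceType !== "NON_COMPLIANT") {
    return undefined;
  }
  const { resourceId, resourceType, awsRegion, awsAccountId, configRuleName } = event.detail;
  return { resourceId, resourceType, region: awsRegion, accountId: awsAccountId, configRuleName };
}

// Usage with the fields from the sample event above.
const sample: ComplianceChangeEvent = {
  source: "aws.config",
  "detail-type": "Config Rules Compliance Change",
  detail: {
    resourceId: "jsj-bucket-777",
    resourceType: "AWS::S3::Bucket",
    awsRegion: "eu-west-1",
    awsAccountId: "111111111111",
    configRuleName: "s3-bucket-logging-enabled-conformance-pack-mogxp88qe",
    newEvaluationResult: { complianceType: "NON_COMPLIANT" },
  },
};
console.log(extractNonCompliance(sample));
console.log(JSON.stringify(compliancePattern));
```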

My first instinct when building this project was to use the Resource Groups Tagging API to look up tags, but it isn’t supported by all AWS services, and the event above doesn’t contain the resource ARN anyway. Instead, we can use Config’s advanced query feature to find the tags, regardless of which service the resource belongs to:

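```typescript
import {
  ConfigServiceClient,
  SelectResourceConfigCommand,
} from "@aws-sdk/client-config-service";

// Assumed shape of each JSON row returned by the query below.
interface ILookupResourceTagsQueryResult {
  tags?: { key: string; value: string }[];
}

export interface IConfigService {
  lookupResourceTags(
    resourceType: string,
    resourceId: string,
  ): Promise<Record<string, string>>;
}

export class ConfigService implements IConfigService {
  constructor(private readonly configServiceClient: ConfigServiceClient) {}

  async lookupResourceTags(
    resourceType: string,
    resourceId: string,
  ): Promise<Record<string, string>> {
    // Advanced queries work across every resource type Config records,
    // so we don't need a service-specific API to read the tags.
    const response = await this.configServiceClient.send(
      new SelectResourceConfigCommand({
        Expression: `
          SELECT
            tags
          WHERE
            resourceType = '${resourceType}'
            AND resourceId = '${resourceId}'
        `,
        Limit: 100,
      }),
    );

    /*
      If we got exactly one query result, turn the list of tags into an
      object of tag keys -> tag values.
    */
    if (response.Results && response.Results.length === 1) {
      const result = JSON.parse(
        response.Results[0],
      ) as ILookupResourceTagsQueryResult;

      return (result.tags ?? []).reduce((out, curr) => {
        out[curr.key] = curr.value;
        return out;
      }, {} as Record<string, string>);
    }

    return {};
  }
}
```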
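One thing to watch: the query interpolates `resourceType` and `resourceId` straight into the expression, so an ID containing a quote would break it. A conservative option is to validate before building the query. This sketch rejects rather than escapes, because I’m not certain of the escaping rules in Config’s SQL dialect; `buildTagQuery` is a hypothetical helper, and the pattern assumes three-part type names like `AWS::S3::Bucket`.

```typescript
// Matches three-part resource types such as AWS::S3::Bucket or AWS::DynamoDB::Table.
const RESOURCE_TYPE = /^AWS::[A-Za-z0-9]+::[A-Za-z0-9]+$/;

// Build the tag lookup expression, refusing values that could break out of the quotes.
function buildTagQuery(resourceType: string, resourceId: string): string {
  if (!RESOURCE_TYPE.test(resourceType)) {
    throw new Error(`unexpected resource type: ${resourceType}`);
  }
  if (resourceId.includes("'")) {
    throw new Error(`refusing to query a resource ID containing a quote: ${resourceId}`);
  }
  return `SELECT tags WHERE resourceType = '${resourceType}' AND resourceId = '${resourceId}'`;
}

console.log(buildTagQuery("AWS::S3::Bucket", "jsj-bucket-777"));
```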

Once we’ve got the tags, we can use the Octokit library for the GitHub API to find a Pull Request for that project/branch combination:

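```typescript
// Tag keys follow the schema above, e.g. "jsherz-com:workload:project".
const project = tags[`${companyIdentifier}:workload:project`];
const ref = tags[`${companyIdentifier}:workload:ref`];

// Untagged resources can't be traced back to a repository.
if (!project || !ref) {
  logger.info("resource is missing workload tags - skipping", { tags });
  return;
}

// Infrastructure deployed from main isn't tied to a Pull Request.
if (ref === "main") {
  logger.info("not for a pull request - skipping", { ref });
  return;
}

// "git@github.com:owner/repo.git" -> ["owner", "repo"]
const [owner, repo] = project
  .replace("git@github.com:", "")
  .replace(/\.git$/, "")
  .split("/");

const relevantPullsResponse = await octokit.request(
  "GET /repos/{owner}/{repo}/pulls",
  {
    owner,
    repo,
    state: "open",
    // GitHub expects "owner:branch"; this assumes the branch isn't on a fork.
    head: `${owner}:${ref}`,
    headers: {
      "X-GitHub-Api-Version": "2022-11-28",
    },
  },
);

const relevantPulls = relevantPullsResponse.data;
```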
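The string replacements above only understand SSH remotes. If some of your projects are tagged with HTTPS URLs instead, a small parser can handle both. A sketch, with `parseGitHubProject` as a hypothetical helper:

```typescript
// Parse owner/repo from an SSH or HTTPS GitHub remote URL.
function parseGitHubProject(project: string): { owner: string; repo: string } {
  const match =
    /^(?:git@github\.com:|https:\/\/github\.com\/)([^\/]+)\/([^\/]+?)(?:\.git)?\/?$/.exec(project);
  if (!match) {
    throw new Error(`not a GitHub remote: ${project}`);
  }
  return { owner: match[1], repo: match[2] };
}

console.log(parseGitHubProject("git@github.com:jSherz/shift-security-left-aws-config.git"));
console.log(parseGitHubProject("https://github.com/jSherz/shift-security-left-aws-config"));
```

The lazy `([^\/]+?)` group plus an anchored optional `.git` suffix only strips `.git` from the end, so a repository such as `user.github.io` keeps its full name.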

If one is found, we can add a comment to the PR:

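```typescript
// Only comment when the branch maps to exactly one open Pull Request.
if (relevantPulls.length === 1) {
  // As far as the comments API is concerned, a Pull Request is an issue.
  const issueNumber = relevantPulls[0].number;

  await octokit.request(
    "POST /repos/{owner}/{repo}/issues/{issue_number}/comments",
    {
      owner,
      repo,
      issue_number: issueNumber,
      body: comment, // Markdown message describing the failed rule
      headers: {
        "X-GitHub-Api-Version": "2022-11-28",
      },
    },
  );
}
```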
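As a belt-and-braces measure, the Lambda could re-check the returned pulls itself before commenting, so a mis-specified `head` filter can never lead to a comment on the wrong PR. A sketch, where `PullSummary` is a hypothetical subset of the fields GitHub returns for each pull request (`number`, `state`, `head.ref`):

```typescript
// Subset of a GitHub pull request object that we care about.
interface PullSummary {
  number: number;
  state: string;
  head: { ref: string };
}

// Return the PR number only when exactly one open PR has this branch as its head.
function selectPullRequest(pulls: PullSummary[], ref: string): number | undefined {
  const matches = pulls.filter((pull) => pull.state === "open" && pull.head.ref === ref);
  return matches.length === 1 ? matches[0].number : undefined;
}

const pulls: PullSummary[] = [
  { number: 1, state: "open", head: { ref: "feature/other" } },
  { number: 2, state: "open", head: { ref: "feature/my-cool-thing" } },
];
console.log(selectPullRequest(pulls, "feature/my-cool-thing"));
```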

Putting it all together: the GitHub App

If you want to get stuck into the code behind this solution, you can view the jSherz/shift-security-left-aws-config project on GitHub.

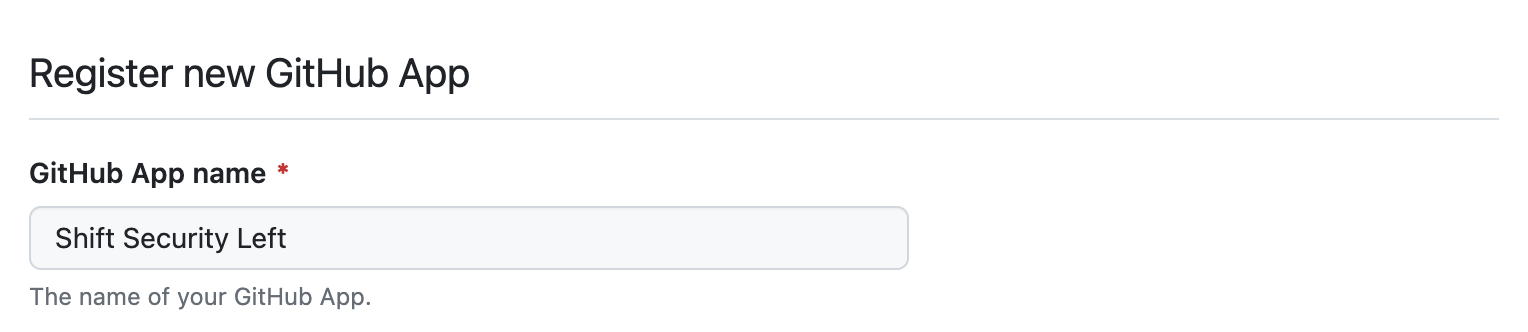

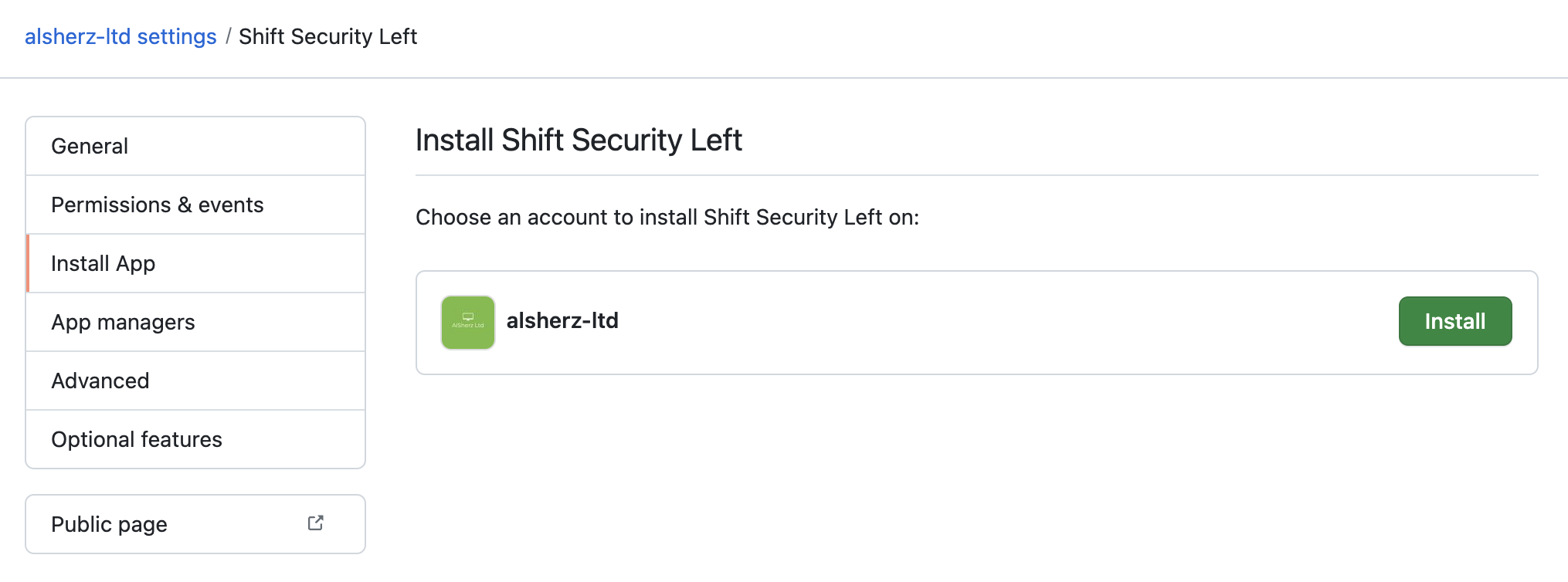

Let’s set up a GitHub App in our GitHub organization. It’ll be private, and thus not available for any other user or organization on GitHub to install.

-

Create the app in your GitHub organization:

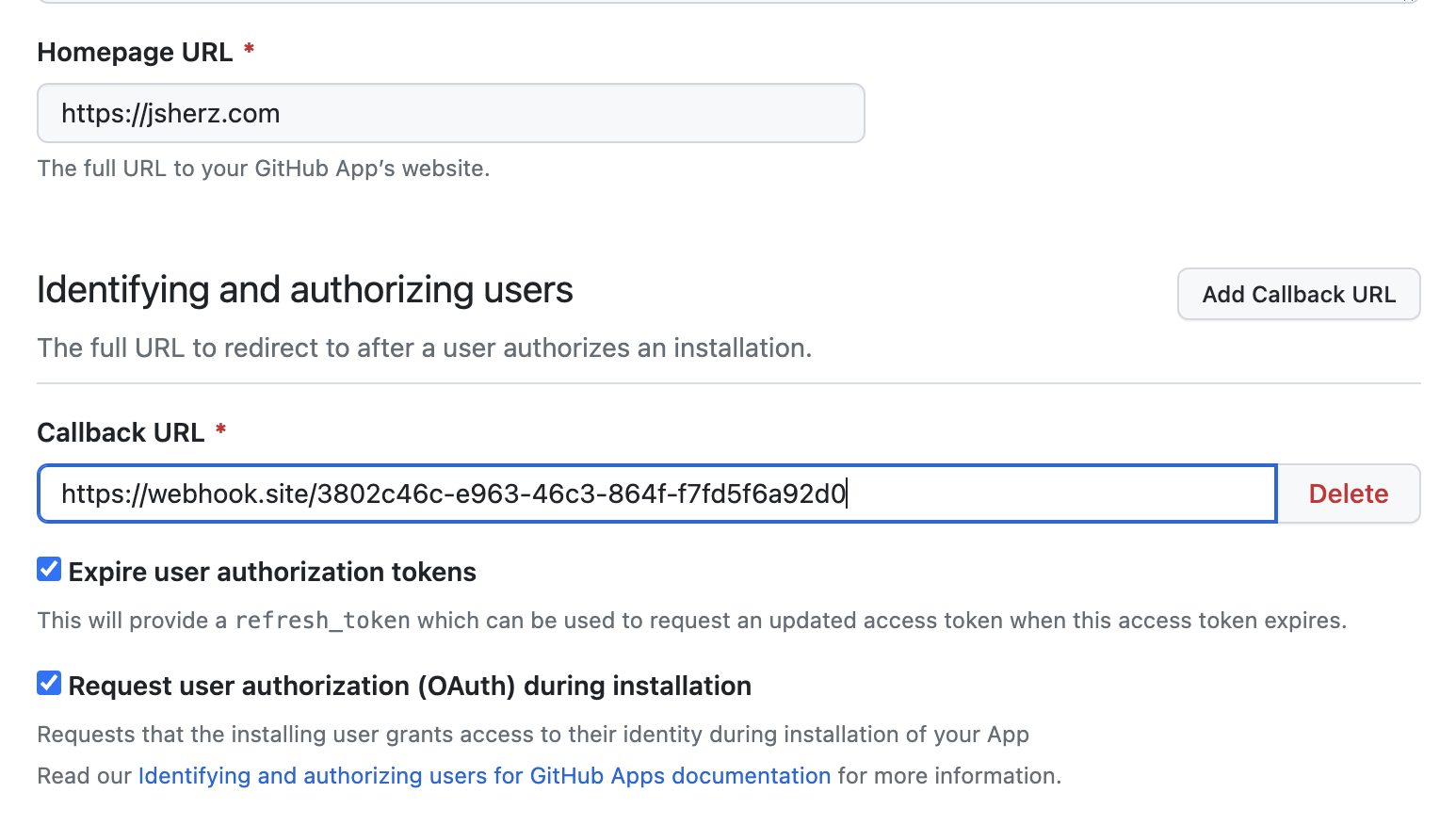

-

Use a service that can capture requests, e.g. webhook.site to listen for the app being installed in step 4:

-

Allow read/write access of issues and pull requests:

-

Install the application:

-

Note down the installation ID - we’ll need it later.
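For context on why the installation ID matters: a GitHub App authenticates by signing a short-lived RS256 JWT with its private key, then exchanges that JWT, together with the installation ID, for an installation access token via `POST /app/installations/{installation_id}/access_tokens`. Here’s a minimal sketch of the JWT step using only Node’s `crypto` module; I haven’t checked how the project itself does this (a library such as `@octokit/auth-app` can handle it for you), and `createAppJwt` is a hypothetical helper.

```typescript
import { createSign, createVerify, generateKeyPairSync } from "node:crypto";

// Encode a JSON value as unpadded base64url, as JWTs require.
function base64url(value: object): string {
  return Buffer.from(JSON.stringify(value)).toString("base64url");
}

// Sign a JWT identifying the app; iss is the app's ID (or client ID).
function createAppJwt(
  appId: string,
  privateKeyPem: string,
  nowSeconds = Math.floor(Date.now() / 1000),
): string {
  const header = base64url({ alg: "RS256", typ: "JWT" });
  const payload = base64url({
    iat: nowSeconds - 60, // backdated to allow for clock drift
    exp: nowSeconds + 9 * 60, // GitHub rejects tokens valid for more than 10 minutes
    iss: appId,
  });
  const signer = createSign("RSA-SHA256");
  signer.update(`${header}.${payload}`);
  const signature = signer.sign(privateKeyPem).toString("base64url");
  return `${header}.${payload}.${signature}`;
}

// Demo with a throwaway key; in practice use the key downloaded from the app's settings.
const { privateKey, publicKey } = generateKeyPairSync("rsa", {
  modulusLength: 2048,
  privateKeyEncoding: { type: "pkcs8", format: "pem" },
  publicKeyEncoding: { type: "spki", format: "pem" },
});
const jwt = createAppJwt("123456", privateKey, 1_700_000_000);
const [h, p, s] = jwt.split(".");
const verifier = createVerify("RSA-SHA256");
verifier.update(`${h}.${p}`);
console.log(verifier.verify(publicKey, Buffer.from(s, "base64url"))); // true
```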

Putting it all together - AWS infrastructure

With the app set up, we can complete the final step: deploying the jSherz/shift-security-left-aws-config project. I’ll leave you to the instructions in its README to get the Lambda function deployed and to configure GitHub API access. It’s (almost) as simple as a terraform apply.

What did we achieve?

With that solution in place, we can tag the infrastructure deployed for our Pull Requests and have near-instant feedback that helps us understand if we’re meeting our organization’s compliance needs. Compliance isn’t sexy, but staying in a flow state and getting feedback on the spot sure is.

At least to me anyway.

Security is everyone’s job, and now we’ve put the right tools into the hands of the people building the infrastructure. They get to fix any problems before the IaC has even landed on the main/trunk branch.

Further reading

We’ve scratched the surface of a few important services in this post. Here are some ways to deepen your understanding:

- Find out which AWS Config rules your organization deploys, and how they’re managed (e.g. organization conformance packs vs Security Hub standards vs deployed into each account).

- Evaluate your current tagging strategy. Does it let you easily find the source of a piece of infrastructure? How about the team that’s responsible for it? Are Cost Allocation Tags configured to let you pinpoint who’s spending what?

- If Config seems prohibitively expensive, even in lab use cases, what other tools are available? There are third parties that fill the same niche, and they may even save you some pennies!